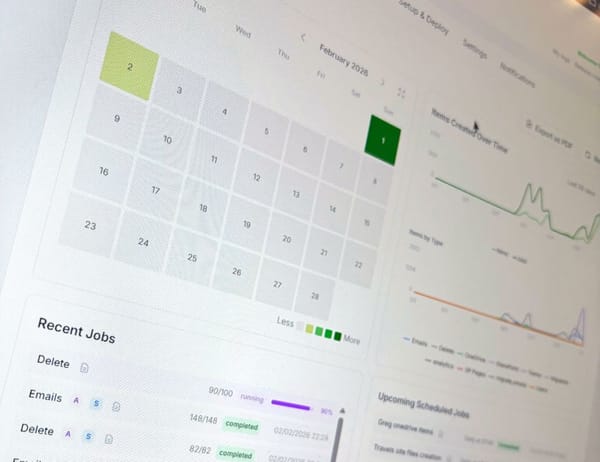

Create big fake uncompressible files (and this is for a good reason)

Sometimes, for backup performance tests or disaster recovery drills, I need a server that can take a real beating. Not your usual 40GB VM—nope, I’m talking a heavyweight 500GB to 1TB beast.

But here’s the kicker: my lab isn’t at home...

So, to load a VM, I have to send all that data through my WAN. 😥

But then, a tiny elf in my head suddenly chimes in and says: "Hey buddy, why not let the VM create the files itself? And for a challenge, make them uncompressible!"

Snap! Here we go!

Let's build a PowerShell script that creates those files to fill up this server.

# Define the path and number of files

$filePath = "C:\files" # Change this to your desired path

$numberOfFiles = 100 # Change this to the number of files you want to create

# Function to create a file with random data

function CreateRandomFile([string]$fileName, [int]$sizeInBytes) {

$fileStream = [System.IO.File]::Create($fileName)

$random = New-Object System.Random

$buffer = New-Object byte[] 10MB

$totalBytesWritten = 0

while ($totalBytesWritten -lt $sizeInBytes) {

$random.NextBytes($buffer)

$bytesToWrite = [Math]::Min($buffer.Length, $sizeInBytes - $totalBytesWritten)

$fileStream.Write($buffer, 0, $bytesToWrite)

$totalBytesWritten += $bytesToWrite

}

$fileStream.Close()

}

# Create the files

for ($i = 1; $i -le $numberOfFiles; $i++) {

$fileName = Join-Path $filePath ("RandomFile$i.bin")

CreateRandomFile $fileName 1073741824 # 1 GB

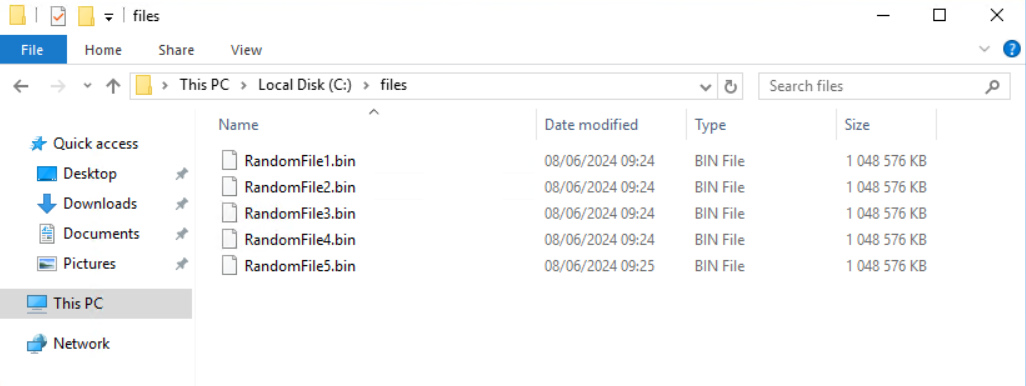

}This script will create X number of files with Y of size in the Z folder.

X, Y and Z are the values to change in the script.
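To reach the 500GB to 1TB range mentioned at the start, the number of files has to be derived from the target volume. Here is a minimal sketch of that arithmetic. `Get-FileCountForTarget` is a hypothetical helper name, not part of the script above, and the 500GB and 1GB values are only examples.

```powershell
# Hypothetical helper: how many files of a given size are needed to
# reach a target volume of data (rounded up to a whole file).
function Get-FileCountForTarget([long]$targetBytes, [long]$fileSizeBytes) {
    return [long][Math]::Ceiling([double]$targetBytes / $fileSizeBytes)
}

# Example values (assumptions): a 500GB target made of 1GB files
$numberOfFiles = Get-FileCountForTarget 500GB 1GB
"Files needed for 500GB: $numberOfFiles"
```

It is worth comparing the target volume with the free space on the drive before launching the run, since the script will happily write until the disk is full.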

You should see the new files appear in your target directory:

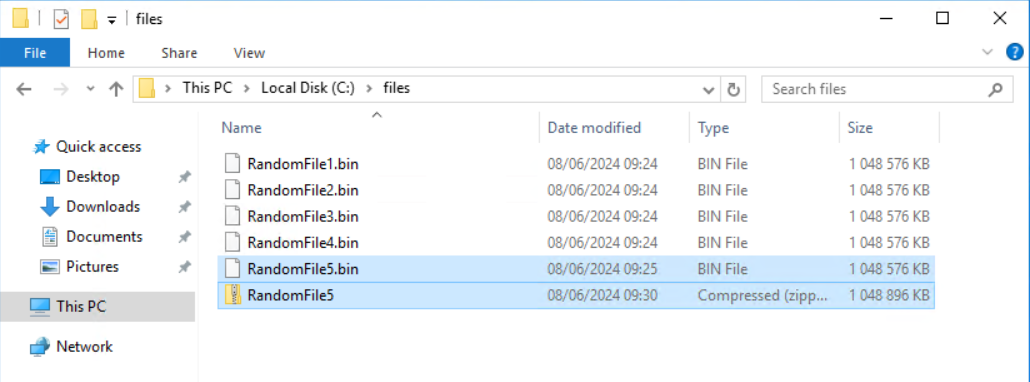
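If you prefer to check from the console rather than from Explorer, something like the following could do the job. It is only a sketch: `Get-RandomFileSummary` is a hypothetical helper name, and it assumes the files keep the `RandomFile<n>.bin` naming used by the script.

```powershell
# Hypothetical helper: count the generated files in a folder and
# report their total size in GB.
function Get-RandomFileSummary([string]$path) {
    $files = @(Get-ChildItem -Path $path -Filter "RandomFile*.bin" -File)
    $totalBytes = ($files | Measure-Object -Property Length -Sum).Sum
    if ($null -eq $totalBytes) { $totalBytes = 0 }   # Empty folder
    [PSCustomObject]@{
        Count   = $files.Count
        TotalGB = [Math]::Round($totalBytes / 1GB, 2)
    }
}

# Usage, assuming the same folder as in the script:
# Get-RandomFileSummary "C:\files"
```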

But wait, are these files compressible? Will the backup process shrink them before sending them off to the backup destination?

Let's try to zip them.

Et voilà! 0% compression rate!

(The archive is actually slightly larger than the originals because of the Zip headers.)
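The same check can be done without zipping hundreds of gigabytes. The sketch below gzips a small in-memory sample of random bytes and, for comparison, a sample of zeros. `Get-GzipRatio` is a hypothetical helper name, the 1MB sample size is arbitrary, and gzip is used here as a stand-in for whatever compression your backup software applies.

```powershell
# Hypothetical helper: gzip a byte array in memory and return the
# compressed size divided by the original size (about 1.0 = no gain).
function Get-GzipRatio([byte[]]$data) {
    $output = New-Object System.IO.MemoryStream
    $gzip = New-Object System.IO.Compression.GZipStream($output, [System.IO.Compression.CompressionMode]::Compress)
    $gzip.Write($data, 0, $data.Length)
    $gzip.Close()   # Flushes the remaining compressed bytes into $output
    return $output.ToArray().Length / $data.Length
}

# Same kind of data as the generated files: bytes from System.Random
$randomSample = New-Object byte[] 1MB
(New-Object System.Random).NextBytes($randomSample)

# Reference point: a buffer full of zeros, which compresses very well
$zeroSample = New-Object byte[] 1MB

"Random data ratio: {0:N3}" -f (Get-GzipRatio $randomSample)
"Zero data ratio:   {0:N3}" -f (Get-GzipRatio $zeroSample)
```

The random sample should come out at a ratio of about 1 (often a hair above, because of the gzip overhead), while the zero sample should shrink to a tiny fraction of its size.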

👍